SAILS Symposium

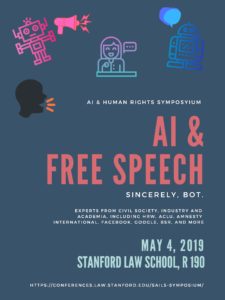

AI & Free Speech

ICT companies increasingly use automated systems to flag illegal or inappropriate content. Because the number of posts potentially linked to terrorist content, hate speech or fake news exceeds any human capability, the use of AI has become common practice in content moderation. Yet automated systems are prone to mistakes and often remove content in error, including videos or posts documenting human rights abuses. Moreover, users’ newsfeeds are increasingly determined by algorithms, which shape what content users are exposed to and can lock them into “echo chambers”. This is especially problematic in countries where social media platforms are the main source of information.

Algorithmic prediction certainly has the potential to prevent conflict. However, AI-based technology can also be used by authoritarian regimes to exacerbate censorship, monitor journalists, activists and political dissidents, and restrict people’s freedom of association by preventing online organizing. AI-enhanced surveillance, such as face recognition, can also have a chilling effect on free speech, as people opt to remain silent for fear of being targeted. Finally, the expansion and development of bot accounts risks increasing online harassment, which typically targets vulnerable people most.

Angèle Christin

Dunstan Allison-Hope

Jeremy Gillula

Katie Joseff

Andy O’Connell
