SAILS Symposium

SAILS Symposium

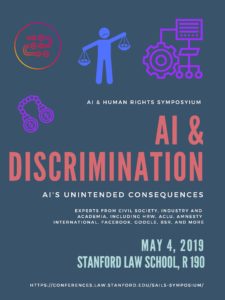

AI & Discrimination

What is the impact of governments and private parties deploying AI algorithms in decision-making? Do AI-powered tools entrench or worsen human biases, or can they eliminate human bias and replace it with more equitable and inclusive decision-making? Algorithmic decision-making can be fundamentally flawed because the underlying models are trained on data that reflects societal biases, which can lead to discriminatory results. As AI-based systems increasingly infiltrate our daily lives, from banking and hiring to judicial sentencing, cases have exposed discrimination based on race and gender, among other characteristics. What’s more, AI-enhanced surveillance systems disproportionately target minorities and put people’s privacy at risk.

Sonoo Thadaney-Israni

Peter Eckersley

Mehran Sahami

Jamila Smith-Loud

Nicole A. Ozer